Reproducible deep learning with OpenML

Releasing OpenML deep learning libraries compatible with Keras, PyTorch and MXNet.

Deep learning is facing a reproducibility crisis right now[1]. The scale of the experiments and the many hyperparameters that affect performance make it hard for authors to document everything needed to reproduce their results. Currently, the best way to make an experiment reproducible is to release the code. However, that falls short in many situations, for example when the codebase is huge and undocumented and someone just wants to reproduce the model.

OpenML[2] is an online machine learning platform for sharing and organizing data, machine learning algorithms and experiments. Until now, we only supported classical machine learning with libraries such as scikit-learn and mlr. We see a huge need for reproducible deep learning, so OpenML is launching deep learning plugins for popular libraries: Keras, MXNet and PyTorch.

Below is a short tutorial on how to use our PyTorch extension with the MNIST dataset.

Setup

To install openml and the openml PyTorch extension, run the following command in your terminal:

pip install openml openml_pytorch


Let's import the necessary libraries:

import torch.nn
import torch.optim

import openml
import openml_pytorch

import logging

Set the API key for the openml Python library. You can find your API key in your openml.org account.

openml.config.apikey = 'YOUR_API_KEY'  # replace with the key from your openml.org account
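Hard-coding the key in a script or notebook makes it easy to leak when the code is shared for reproduction. A minimal sketch of one alternative is to read it from an environment variable. The variable name `OPENML_APIKEY` and the helper `load_api_key` are hypothetical, chosen only for illustration:

```python
import os


def load_api_key(var_name="OPENML_APIKEY"):
    """Return the OpenML API key stored in an environment variable.

    OPENML_APIKEY is a hypothetical name used for illustration; any name
    works as long as it is exported before Python starts.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your openml.org API key."
        )
    return key


# Usage (requires the openml package):
# openml.config.apikey = load_api_key()
```

This keeps the tutorial code shareable as-is, since the secret lives outside the file.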

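Define a sequential network that performs the initial image reshaping and normalization:

processing_net = torch.nn.Sequential(
    # Reshape each flat 784-pixel row into a 1-channel 28x28 image (batch, channels, height, width).
    openml_pytorch.layers.Functional(function=torch.Tensor.reshape,
                                     shape=(-1, 1, 28, 28)),
    # Normalize the single input channel.
    torch.nn.BatchNorm2d(num_features=1)
)
print(processing_net)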
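The mnist_784 dataset stores each image as a flat row of 784 pixel values, and the `-1` in `shape=(-1, 1, 28, 28)` asks PyTorch to infer the batch dimension from the total number of elements. The standard-library sketch below mimics how a single `-1` is resolved; the helper `infer_shape` is hypothetical and not part of PyTorch:

```python
from math import prod


def infer_shape(num_elements, shape):
    """Resolve a single -1 entry in `shape`, similar to torch.Tensor.reshape."""
    if list(shape).count(-1) > 1:
        raise ValueError("only one dimension can be inferred")
    known = prod(d for d in shape if d != -1)
    if -1 not in shape:
        if known != num_elements:
            raise ValueError(f"shape {shape} is invalid for {num_elements} elements")
        return tuple(shape)
    if known == 0 or num_elements % known != 0:
        raise ValueError(f"shape {shape} is invalid for {num_elements} elements")
    # The inferred dimension absorbs whatever the known dimensions leave over.
    return tuple(num_elements // known if d == -1 else d for d in shape)


# A batch of 64 flattened MNIST images: 64 rows x 784 pixels.
print(infer_shape(64 * 784, (-1, 1, 28, 28)))  # (64, 1, 28, 28)
```

Because the batch size is inferred, the same layer works for any batch size the extension feeds it.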

Define a sequential network that extracts features from the image:

features_net = torch.nn.Sequential(
    torch.nn.Conv2d(in_channels=1, out_channels=32, kernel_size=5),   # 28x28 -> 24x24
    torch.nn.LeakyReLU(),
    torch.nn.MaxPool2d(kernel_size=2),                                # 24x24 -> 12x12
    torch.nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5),  # 12x12 -> 8x8
    torch.nn.LeakyReLU(),
    torch.nn.MaxPool2d(kernel_size=2),                                # 8x8 -> 4x4
)
print(features_net)
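The input size of the first linear layer in the next network, `4 * 4 * 64`, follows from how these layers shrink a 28x28 image. With no padding, stride 1 and no dilation, a `Conv2d` with kernel size k maps a side of length n to n - k + 1, and `MaxPool2d(kernel_size=2)` (whose stride defaults to its kernel size) halves it, rounding down. A small sketch of that arithmetic using the general output-size formula from the PyTorch documentation:

```python
def out_size(n, kernel_size, stride=1, padding=0, dilation=1):
    """Length of one output side for Conv2d / MaxPool2d (floor rounding)."""
    return (n + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


side = 28                                        # MNIST images are 28x28
side = out_size(side, kernel_size=5)             # Conv2d(1, 32, 5)  -> 24
side = out_size(side, kernel_size=2, stride=2)   # MaxPool2d(2)      -> 12
side = out_size(side, kernel_size=5)             # Conv2d(32, 64, 5) -> 8
side = out_size(side, kernel_size=2, stride=2)   # MaxPool2d(2)      -> 4

flattened = side * side * 64                     # 64 output channels
print(side, flattened)                           # 4 1024
```

If you change a kernel size or add padding, rerunning this arithmetic tells you the new `in_features` for the first linear layer.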

Define a sequential network that flattens the features and maps them to one output score for each of the ten digits:

results_net = torch.nn.Sequential(
    # Flatten the 64 feature maps of size 4x4 into one vector per image.
    openml_pytorch.layers.Functional(function=torch.Tensor.reshape,
                                     shape=(-1, 4 * 4 * 64)),
    torch.nn.Linear(in_features=4 * 4 * 64, out_features=256),
    torch.nn.LeakyReLU(),
    torch.nn.Dropout(),
    torch.nn.Linear(in_features=256, out_features=10),  # one output per digit 0-9
)
print(results_net)
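The final `Linear` layer outputs ten unnormalized scores (logits), and this network contains no softmax layer. A softmax is the standard way to turn such scores into class probabilities. The standard-library sketch below only illustrates that math; the logit values are made up for the example:

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative logits for the digit classes 0-9 (made-up values).
logits = [1.2, -0.3, 0.5, 3.1, -1.0, 0.0, 0.7, 2.2, -0.5, 0.1]
probs = softmax(logits)
predicted_digit = max(range(10), key=lambda i: probs[i])
print(predicted_digit, round(sum(probs), 6))  # 3 1.0
```

Softmax preserves the ordering of the scores, so the predicted digit is the same whether you take the largest logit or the largest probability.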

Compose the three networks above into the main model:

model = torch.nn.Sequential(
    processing_net,
    features_net,
    results_net
)
print(model)
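As a sanity check on the composed model, you can work out its number of trainable parameters by hand. A `Conv2d` has out x in x k x k weights plus out biases, a `Linear` layer has out x in weights plus out biases, and `BatchNorm2d(num_features=1)` has one scale and one shift. The activation, pooling and dropout layers have none, and the reshape layers are assumed here to be parameter-free wrappers. In PyTorch, `sum(p.numel() for p in model.parameters())` should report the same total:

```python
def conv2d_params(in_ch, out_ch, k):
    return out_ch * in_ch * k * k + out_ch      # weights + biases


def linear_params(in_f, out_f):
    return out_f * in_f + out_f                 # weights + biases


counts = {
    "BatchNorm2d(1)": 2,                        # affine weight and bias
    "Conv2d(1, 32, 5)": conv2d_params(1, 32, 5),
    "Conv2d(32, 64, 5)": conv2d_params(32, 64, 5),
    "Linear(1024, 256)": linear_params(4 * 4 * 64, 256),
    "Linear(256, 10)": linear_params(256, 10),
}
for name, n in counts.items():
    print(f"{name:<18} {n:>7}")
print("total", sum(counts.values()))            # total 317068
```

Most of the parameters sit in the first linear layer, which is typical for small CNNs that flatten feature maps into a dense layer.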

Download the OpenML task for the mnist_784 dataset (task ID 3573):

task = openml.tasks.get_task(3573)

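Run the model on the task and publish the results on openml.org:

# Train and evaluate the model on the task's predefined splits.
run = openml.runs.run_model_on_task(model, task, avoid_duplicate_runs=False)
# Upload the run to openml.org (requires the API key set above).
run.publish()
print('URL for run: %s/run/%d' % (openml.config.server, run.run_id))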

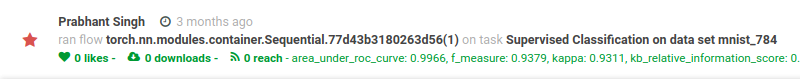

By opening the published URL, you can check the model's performance and other metadata.
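The run's evaluation measures, such as predictive accuracy, are derived from the predictions made on each of the task's predefined test splits. OpenML computes these measures itself. The following is only a simplified standard-library illustration of how per-split scores combine into a mean, with made-up labels and predictions:

```python
from statistics import mean


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical (true labels, predicted labels) for three test splits.
folds = [
    ([7, 2, 1, 0, 4], [7, 2, 1, 0, 9]),
    ([1, 4, 9, 5, 9], [1, 4, 9, 5, 9]),
    ([0, 6, 9, 0, 1], [0, 6, 8, 0, 7]),
]
per_fold = [accuracy(t, p) for t, p in folds]
print(per_fold, round(mean(per_fold), 4))  # [0.8, 1.0, 0.6] 0.8
```

Because every run on the same task is scored on the same splits, results uploaded by different people can be compared directly.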

We hope that the OpenML deep learning plugins will help people reproduce deep learning experiments and will provide a universal platform for reproducible experiments. Here are the links to all currently supported deep learning plugins:

Examples of how to use these libraries are available in their GitHub repositories. The libraries are still in development, so we would appreciate any feedback through their GitHub issues. Links: